A gaming website gives users the ability to trade game items with each other on the platform. The platform requires both users' records to be updated and persisted in one transaction. If any update fails, the transaction must roll back.

Which AWS solution can provide the transactional capability that is required for this feature?

A. Amazon DynamoDB with operations made with the Consistent Read parameter set to true

B. Amazon ElastiCache for Memcached with operations made within a transaction block

C. Amazon DynamoDB with reads and writes made by using Transact* operations

D. Amazon Aurora MySQL with operations made within a transaction block

E. Amazon Athena with operations made within a transaction block

Answer: C

✅ Explanation

The scenario requires an atomic transaction: either both users' records are updated successfully, or neither is. Let's analyze the options:

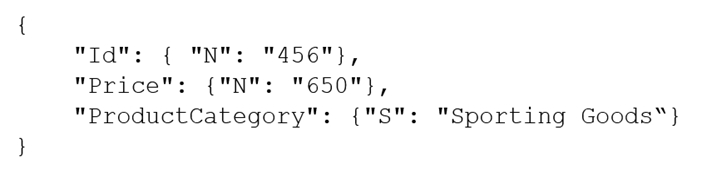

✅ C. Amazon DynamoDB with reads and writes made by using Transact* operations

Correct.

- DynamoDB supports ACID transactions through the TransactWriteItems and TransactGetItems operations.

- These Transact* operations provide all-or-nothing semantics: if any action in the request fails, none of the changes are applied, which is exactly what the trade requires.

- This is ideal for serverless, highly scalable applications like gaming platforms.
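
As a rough illustration of the all-or-nothing behavior, the sketch below builds a TransactWriteItems request that swaps two items between two users. The table name `Users`, the key `UserId`, the map attribute `Inventory`, and the helper names are all hypothetical; the question does not describe the schema. The client is passed in as a parameter, so the request-building logic can be inspected without AWS credentials.

```python
from typing import Any


def build_trade_items(table: str, user_a: str, item_a: str,
                      user_b: str, item_b: str) -> list[dict[str, Any]]:
    """Build the TransactItems list that swaps item_a and item_b between two users.

    Assumes a hypothetical schema: partition key `UserId` (string) and a map
    attribute `Inventory` whose keys are item names.
    """

    def swap(user: str, gives: str, receives: str) -> dict[str, Any]:
        return {
            "Update": {
                "TableName": table,
                "Key": {"UserId": {"S": user}},
                # Add the received item and drop the given one in a single update.
                "UpdateExpression": "SET Inventory.#recv = :owned REMOVE Inventory.#give",
                # Fail the whole transaction if the user no longer holds the item.
                "ConditionExpression": "attribute_exists(Inventory.#give)",
                "ExpressionAttributeNames": {"#give": gives, "#recv": receives},
                "ExpressionAttributeValues": {":owned": {"BOOL": True}},
            }
        }

    return [swap(user_a, item_a, item_b), swap(user_b, item_b, item_a)]


def execute_trade(client: Any, table: str, user_a: str, item_a: str,
                  user_b: str, item_b: str) -> None:
    """Send both updates in one request; they are applied together or not at all."""
    client.transact_write_items(
        TransactItems=build_trade_items(table, user_a, item_a, user_b, item_b)
    )
```

With boto3 installed and credentials configured, this could be called as `execute_trade(boto3.client("dynamodb"), "Users", "alice", "sword", "bob", "shield")`. If either condition check fails, the call raises `TransactionCanceledException` and neither user's record changes.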

❌ A. Amazon DynamoDB with operations made with the Consistent Read parameter set to true

Incorrect.

Consistent Read ensures the latest data is read but does not provide transactional safety for writes.

It does not prevent partial writes: one user's update could succeed while the other's fails.

❌ B. Amazon ElastiCache for Memcached with operations made within a transaction block

Incorrect.

Memcached does not support transactions.

ElastiCache is a caching layer, not meant for durable transactional data.

✅ D. Amazon Aurora MySQL with operations made within a transaction block

Also technically correct, but not the best answer for a serverless, cloud-native workload like a gaming platform.

Aurora supports SQL transactions, so it can provide transactional consistency.

However, Aurora involves more instance and connection management than DynamoDB, which makes DynamoDB the better fit for high-volume, real-time use cases like item trading in games.

❌ E. Amazon Athena with operations made within a transaction block

Incorrect.

Athena is a query service for S3-based data lakes.

It is built for analytical queries over stored data, not for real-time transactional updates to individual records.

Final Answer:

✅ C. Amazon DynamoDB with reads and writes made by using Transact* operations